Text2Light: Zero-Shot Text-Driven HDR Panorama Generation

TOG 2022 (Proc. SIGGRAPH Asia)

- Zhaoxi Chen

- Guangcong Wang

- Ziwei Liu

Nanyang Technological University

Abstract

High-quality HDRIs (High Dynamic Range Images), typically HDR panoramas, are one of the most popular ways to create photorealistic lighting and 360-degree reflections of 3D scenes in graphics. Because HDRIs are difficult to capture, a versatile and controllable generative model is highly desirable, one that lets lay users intuitively control the generation process. However, existing state-of-the-art methods still struggle to synthesize high-quality panoramas of complex scenes. In this work, we propose Text2Light, a zero-shot text-driven framework that generates HDRIs at 4K+ resolution without paired training data. Given a free-form text description of the scene, we synthesize the corresponding HDRI in two dedicated steps: 1) text-driven panorama generation in low dynamic range (LDR) and low resolution (LR), and 2) super-resolution inverse tone mapping, which scales up the LDR panorama in both resolution and dynamic range. Extensive experiments demonstrate the superior capability of Text2Light in generating high-quality HDR panoramas. In addition, we show the feasibility of our work in realistic rendering and immersive VR.
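To make step 2 concrete, the sketch below shows what expanding dynamic range means in the simplest classical setting. It is an assumption for illustration, not Text2Light's SR-iTMO (which is learned and also raises resolution): it decodes the sRGB transfer curve and then inverts the global Reinhard tone-mapping operator `L_d = L / (1 + L)`, giving `L = L_d / (1 - L_d)`. The function names and the `max_value` clamp are hypothetical.

```python
import numpy as np


def srgb_to_linear(ldr: np.ndarray) -> np.ndarray:
    """Undo the standard sRGB transfer curve for display values in [0, 1]."""
    ldr = np.clip(ldr, 0.0, 1.0)
    return np.where(ldr <= 0.04045, ldr / 12.92, ((ldr + 0.055) / 1.055) ** 2.4)


def inverse_reinhard(ldr: np.ndarray, max_value: float = 0.99) -> np.ndarray:
    """Hypothetical classical iTMO: invert L_d = L / (1 + L) after sRGB decoding.

    max_value clamps linear display values below 1 so the division stays finite;
    it therefore fixes the peak radiance assigned to saturated pixels.
    """
    lin = np.minimum(srgb_to_linear(ldr), max_value)
    return lin / (1.0 - lin)


# Usage: black stays black, mid-grey expands slightly, white hits the clamp.
ldr = np.array([0.0, 0.5, 1.0])
hdr = inverse_reinhard(ldr)
```

Because the inverse is unbounded as `L_d` approaches 1, a fixed clamp decides the peak radiance of saturated pixels (here 0.99 maps to 99). Recovering plausible radiance for clipped regions such as the sun is one reason a learned operator is attractive.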

Framework

We decompose the generation of an HDR panorama into two stages. Stage I translates the input text into an LDR panorama based on a dual-codebook discrete representation. First, the input text is mapped to a text embedding by the pre-trained CLIP model. Second, a text-conditioned global sampler learns to sample holistic semantics from the global codebook according to the input text. Third, a structure-aware local sampler synthesizes local patches and composites them accordingly. Stage II upscales the LDR result of Stage I based on structured latent codes, which serve as continuous representations. We propose a novel Super-Resolution Inverse Tone Mapping Operator (SR-iTMO) to simultaneously increase the spatial resolution and the dynamic range of the panorama.
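Stage I represents panoramas with discrete codes. As a minimal sketch, assuming a standard VQ-VAE/VQGAN-style codebook (the sizes below are hypothetical and not taken from the paper), turning continuous encoder features into code indices is a nearest-neighbour lookup. In a dual-codebook design, the global and local codebooks would each be queried this way during training; at generation time the samplers predict indices directly, and the codebook maps them back to vectors.

```python
import numpy as np


def quantize(features: np.ndarray, codebook: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Replace each feature vector with its nearest codebook entry (squared L2).

    features: (N, D) continuous encoder outputs
    codebook: (K, D) learned code vectors
    Returns (indices of shape (N,), quantized vectors of shape (N, D)).
    """
    # ||f - c||^2 = ||f||^2 - 2 f.c + ||c||^2, evaluated for all (N, K) pairs at once
    d2 = (
        np.sum(features**2, axis=1, keepdims=True)
        - 2.0 * features @ codebook.T
        + np.sum(codebook**2, axis=1)
    )
    indices = np.argmin(d2, axis=1)
    return indices, codebook[indices]


# Usage with a hypothetical codebook of 64 codes of dimension 8:
rng = np.random.default_rng(0)
codebook = rng.normal(size=(64, 8))
# Slightly perturbed copies of codes 3 and 1 should snap back to those codes.
idx, q = quantize(codebook[[3, 1]] + 1e-3, codebook)
```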

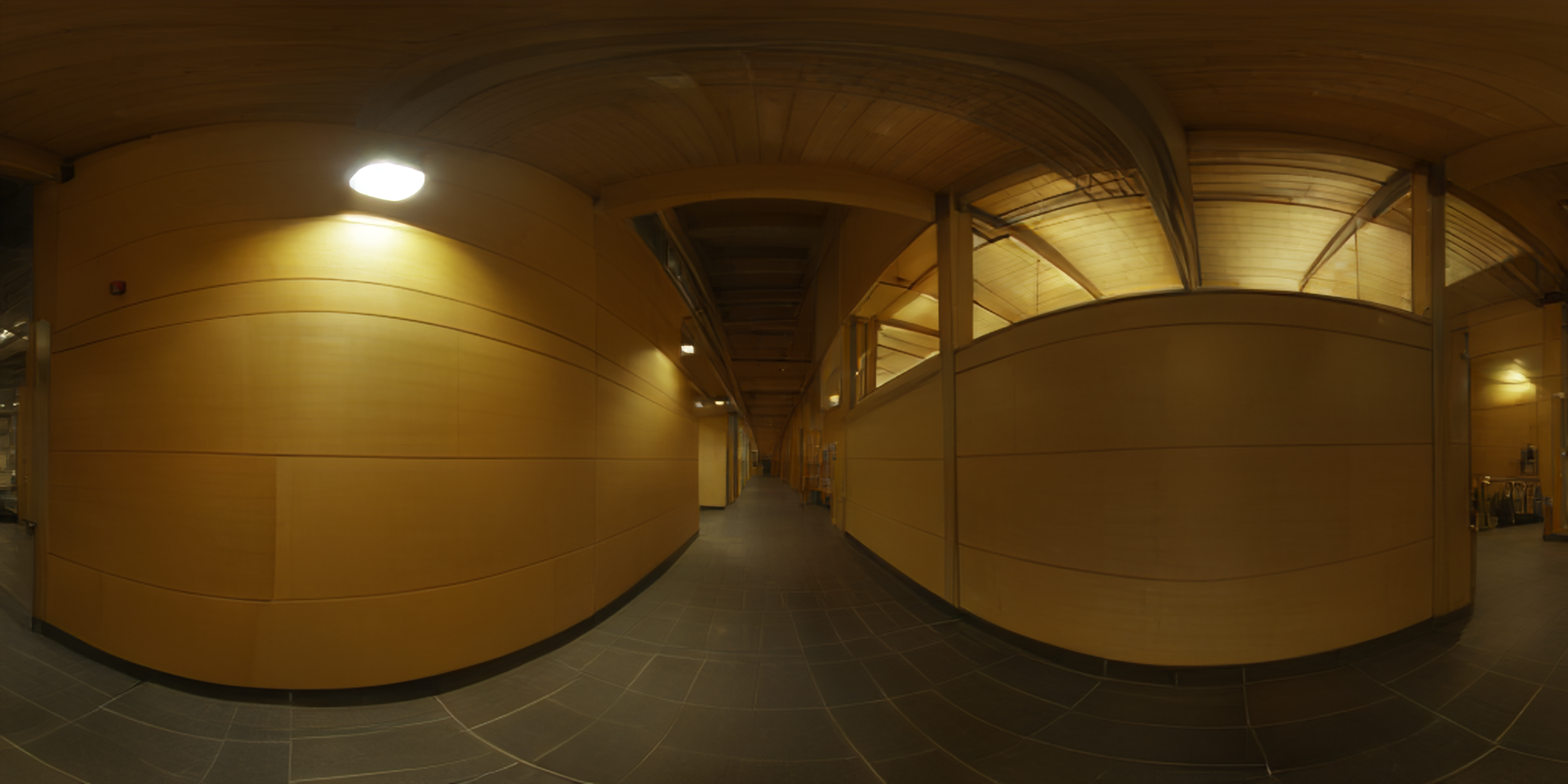

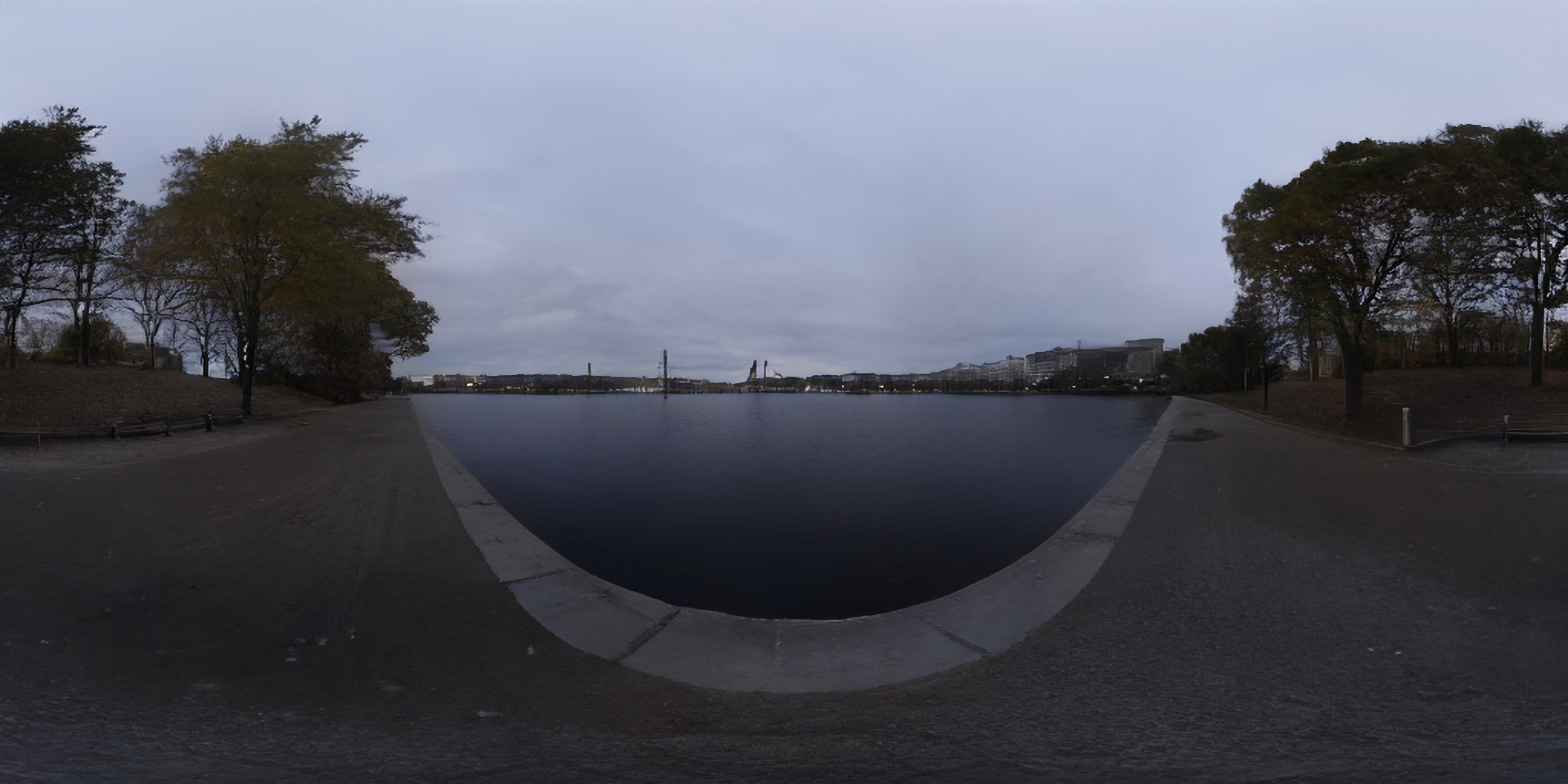

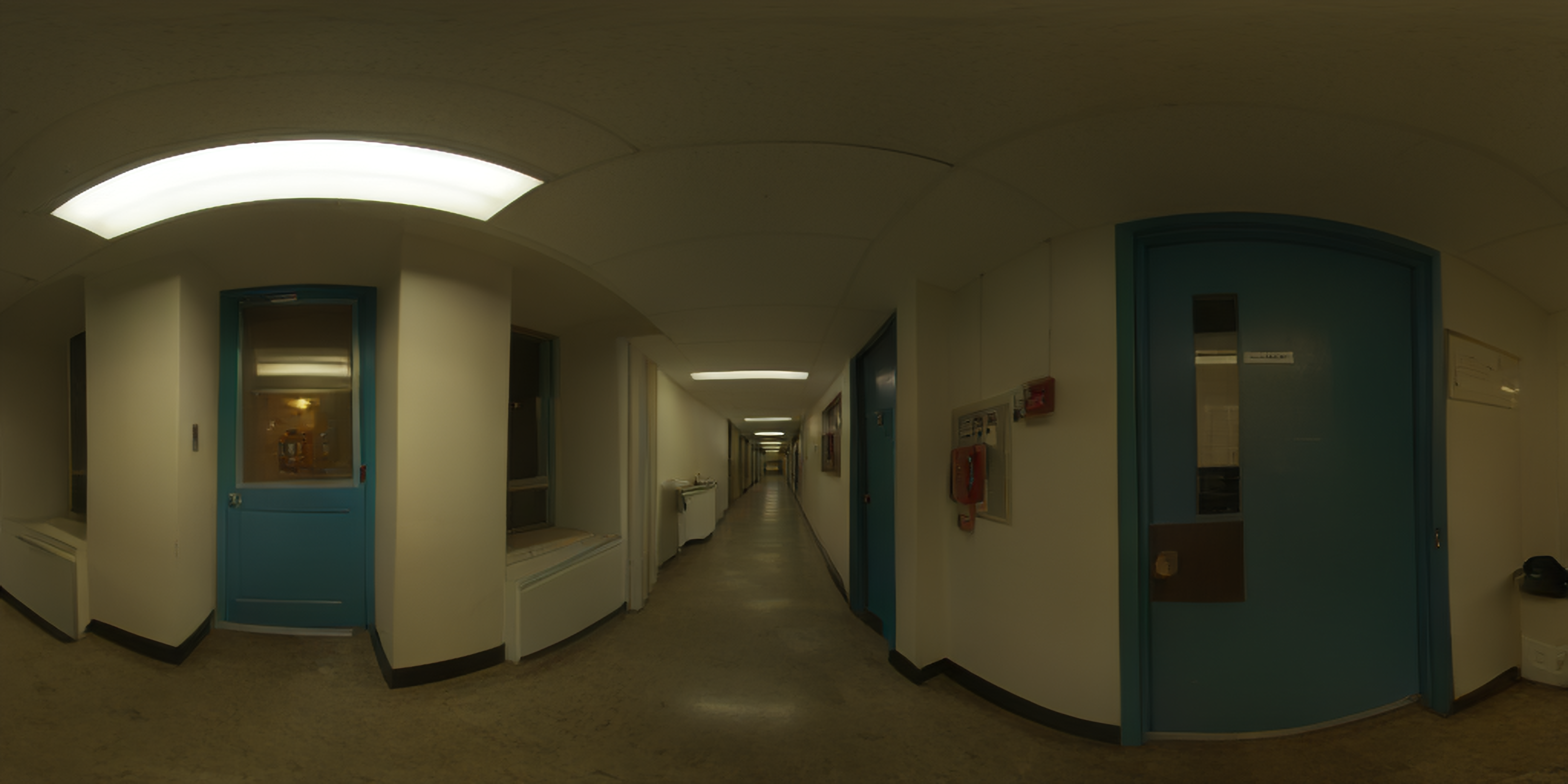
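Both stages work on equirectangular panoramas, where every pixel corresponds to a direction on the unit sphere; a sampler that is aware of panorama structure, or an operator that queries continuous coordinates, plausibly relies on this correspondence. The sketch below is an assumption for illustration: it uses one common longitude/latitude convention (y axis up), which may differ from the convention in Text2Light's code, and the function names are hypothetical.

```python
import numpy as np


def pixel_to_direction(height: int, width: int) -> np.ndarray:
    """Unit view directions for every pixel centre of an equirectangular panorama.

    Assumed convention: longitude runs from -pi (left) to +pi (right),
    latitude from +pi/2 (top) to -pi/2 (bottom), and y points up.
    Returns an array of shape (height, width, 3).
    """
    v = (np.arange(height) + 0.5) / height  # normalized row coordinate in (0, 1)
    u = (np.arange(width) + 0.5) / width  # normalized column coordinate in (0, 1)
    lat = np.pi * (0.5 - v)
    lon = 2.0 * np.pi * (u - 0.5)
    lat, lon = np.meshgrid(lat, lon, indexing="ij")
    return np.stack(
        [np.cos(lat) * np.sin(lon), np.sin(lat), np.cos(lat) * np.cos(lon)], axis=-1
    )


def direction_to_pixel(d: np.ndarray, height: int, width: int) -> tuple[np.ndarray, np.ndarray]:
    """Inverse mapping: continuous (row, col) coordinates for unit directions (..., 3)."""
    lat = np.arcsin(np.clip(d[..., 1], -1.0, 1.0))
    lon = np.arctan2(d[..., 0], d[..., 2])
    row = (0.5 - lat / np.pi) * height - 0.5
    col = (lon / (2.0 * np.pi) + 0.5) * width - 0.5
    return row, col


# Usage: directions for a tiny 8 x 16 panorama, then map them back to pixels.
dirs = pixel_to_direction(8, 16)
row, col = direction_to_pixel(dirs, 8, 16)
```

Because `direction_to_pixel` returns fractional coordinates, it can be used to query a panorama at arbitrary directions (for example, when looking up environment lighting), not only at pixel centres.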



Related Links

StyleLight generates HDR indoor panoramas from a limited field-of-view (FOV) image.

AvatarCLIP proposes a zero-shot text-driven framework for 3D avatar generation and animation.

Text2Human proposes a text-driven controllable human image generation framework.

Relighting4D can relight human actors using HDRIs generated by Text2Light.

blender-cli-rendering offers code snippets for rendering 3D assets via the command line.

Acknowledgements

This work is supported by the National Research Foundation, Singapore under its AI Singapore Programme, NTU NAP, MOE AcRF Tier 2 (T2EP20221-0033), and under the RIE2020 Industry Alignment Fund - Industry Collaboration Projects (IAF-ICP) Funding Initiative, as well as cash and in-kind contribution from the industry partner(s).

The training data are collected from multiple sources, including Poly-Haven, iHDRI, HDRI Skies, HDRMaps, the Laval Indoor dataset, and the Laval Outdoor dataset.

Thanks to Fangzhou Hong, Yuming Jiang, and Jiawei Ren for proofreading the paper.

The website template is borrowed from Mip-NeRF.